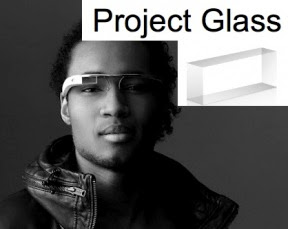

After months of speculation, Google revealed some information (on April 4th, 2012, to be specific) about Project Glass. Instead of using a smartphone to find information about an object, translate a text, get directions, or compare prices, you can use smart glasses that augment reality and help you understand more about the things around you.

Since more than a week has passed, I have had a good chance to read and analyze the views that several tech blogs and experts have shared about this project. Here are a few more details about Project Glass, along with the opinions I agree with.

Google's concept glasses have:

a) a camera,

b) a microphone,

c) a 3G/4G data connection (to send and receive data in real time), and

d) a number of sensors, including motion sensors and GPS.

One of the people who used the glasses said that "they let technology get out of your way. If I want to take a picture I don't have to reach into my pocket and take out my phone; I just press a button at the top of the glasses and that's it."

The New York Times reported that "the glasses [could] go on sale to the public by the end of the year. (...) The people familiar with the Google glasses said they would be Android-based, and will include a small screen that will sit a few inches from someone's eye." Seth Weintraub found that "the navigation system currently used is head tilting to scroll and click, (...) I/O on the glasses will also include voice input and output, and we are told the CPU/RAM/storage hardware is near the equivalent of a generation-old Android smartphone".

According to TechCrunch, Apple and Facebook should team up to compete with Google on this, since Project Glass takes a ton of the things you use your iPhone and iPod for and puts them into your glasses. The glasses will likely run a version of Android and be voice-controlled (Google’s competitor to Siri), so people might buy Google glasses rather than snapping up the latest Apple device.

But Apple is the world’s greatest hardware company, and it should seek to capitalize on Google’s lack of hardware experience by spending some of its cash reserves to lock up critical component manufacturers. This technology sure seems like the future, so Apple needs to be ready to pounce. But the problem remains that it has no social network or other key services to power its own version…

Facebook Should Team Up With Apple

Not having its own mobile OS or device is hurting Facebook, and eyeglass computing could turn into round two. The video already showed Google+ as the preferred sharing method. Unlike an Android phone where you could just open the Facebook app, Project Glass won’t necessarily allow third-party apps, at least at first, and could make them harder to access than Google+ which will be baked in.

Apple needs somewhere to share the content you’d create with its glasses and Facebook needs to make sure Apple lets it get deeply embedded, with or without Twitter alongside it.

Postscript: If Apple or Facebook consider eyeglass computing marketable to the mainstream in the next few years, they should be terrified of Google’s glasses today, and this should give them a jolt.

TechCrunch also reported that the product may end up being called Google Eye.

Here's a promising video that shows why and how the glasses could be helpful:

Google’s augmented-reality Project Glass is going to disrupt your business model. If you don’t even have to pull your phone out to take a photo, get directions, or message friends, why would you need to buy the latest iPhone or spend so much time on Facebook?

Kevin Tofel of GigaOM wrote that Google’s glasses make sense as the “next” mobile device, and I agree with his reasoning: “We have gone from immobile desktops to portable laptops and now we are toting tablets and pocketable smartphones. Where can we go from here if not to the growing number of connected, wearable gadgets? As silly as the idea may look or sound to some, I find merit in the approach, as it seems like a logical next step.”

From a consumer perspective, Project Glass also advances another trend that has been growing. Touch interfaces have reinvented how we use mobile devices, but hardware design is advancing to the point where the interfaces are starting to disappear. Instead of holding a tablet, people are interacting directly with an app, Web page, photo or other digital object through a minimal interface, using either voice or minute gestures. In essence, such glasses would allow people to interact digitally with the physical world around them without a device or user interface getting in the way.

It could be a year before this eyewear reaches stores, but that’s why these and other tech companies need to strategize now. If they wait to see whether the device is a hit, the world could be seeing through Google-tinted glasses by the time they adapt. It will be interesting to see whether Google actually sells these smart glasses. Many issues need to be solved before releasing a commercial product: from battery life to packing so much technology into such a small product, and from improving Google Goggles to handling real-time video streaming.
